Introduction

In today’s always-connected world, digital infrastructure is no longer optional; it’s foundational. From edge computing to real-time data syncing, businesses require efficient, secure, scalable systems to operate at full capacity. Whether you’re running high-performance cloud applications, building decentralized platforms, or managing real-time data delivery between devices, the tools you rely on must be reliable and resilient.

A term that is becoming more common in industry talks is “eporer”: a type of high-performance system or solution, depending on the context, often linked to mixed digital operations, improved transfer methods, or data routing. While not yet a mainstream platform or brand, eporer is gaining traction within niche communities interested in smarter, leaner infrastructure design.

This in-depth guide explores ten pillars central to modern tech architecture and how solutions classified under or like “eporer” could define productivity, stability, and speed for the next generation of digital services.

The Shift Toward Edge-Optimized Infrastructure

In 2026, edge computing will continue to grow, shifting data processing away from core cloud centers and closer to the devices that generate it. This is essential for IoT, gaming, health tech, autonomous vehicles, and any industry where milliseconds matter.

Why edge matters more in 2026:

- Reduced latency (often under 15 ms on mid-range networks)

- Cost-effective bandwidth usage

- Enhanced privacy control (data remains closer to origin)

- Local resiliency during main cloud outages

| Centralized Cloud | Edge Computing |
| --- | --- |
| High latency | Near-zero latency |
| Dependent on stable internet | Auto-redundancy |
| High data egress fees | Less external transfer needed |

Solutions like those described under the eporer framework use these qualities to move enterprise processing closer to consumer touchpoints.
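To illustrate the local-resiliency point, the following sketch keeps readings on the edge node during a cloud outage and forwards them once an upload succeeds. The `EdgeBuffer` class and the `fake_upload` stand-in are hypothetical names. A real deployment would also persist the queue to disk and back off between retries, and both are omitted here.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EdgeBuffer:
    """Store-and-forward buffer for a hypothetical edge node.

    Readings are always accepted locally and forwarded to the central cloud
    only when the upload callback reports success. An outage therefore delays
    delivery instead of losing data. Once `max_items` is reached, the oldest
    readings are dropped.
    """

    def __init__(self, upload: Callable[[List[Dict]], bool], max_items: int = 10_000):
        self._upload = upload                                 # returns True if the cloud accepted the batch
        self._pending: Deque[Dict] = deque(maxlen=max_items)  # bounded local queue

    def record(self, reading: Dict) -> None:
        self._pending.append(reading)

    def flush(self) -> int:
        """Try to send everything pending; return the number of readings delivered."""
        if not self._pending:
            return 0
        batch = list(self._pending)
        if self._upload(batch):
            self._pending.clear()
            return len(batch)
        return 0  # cloud unreachable: keep the readings and retry on the next flush

    def __len__(self) -> int:
        return len(self._pending)


# Usage with a stand-in for the cloud endpoint (hypothetical, for illustration).
cloud_up = False
received: List[Dict] = []


def fake_upload(batch: List[Dict]) -> bool:
    if cloud_up:
        received.extend(batch)
        return True
    return False


buf = EdgeBuffer(fake_upload)
buf.record({"sensor": "t1", "value": 21.5})
print(buf.flush(), len(buf))  # 0 1  (outage: reading kept locally)
cloud_up = True
print(buf.flush(), len(buf))  # 1 0  (cloud back: reading delivered)
```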

Intelligent Data Routing: Dynamic Path Selection Models

Traditional routing relies on static routes and ephemeral connection layers. In fast-paced, layered networks, however, intelligent routing based on real-time traffic, data sensitivity, and load balancing is now in demand.

Features of intelligent routing:

- Context-aware protocol switching

- Geo-optimized handshakes

- Behavioral learning from traffic patterns

- Failover systems with no downtime

Eporer-like systems build intelligent path selection into the middleware layer, allowing faster transitions and per-packet data prioritization. This can bring significant improvements in latency-sensitive sectors such as gaming and AI training.
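Path selection of this kind can be sketched as a scoring function over candidate paths. This minimal version assumes each path reports its recent latency, its load, its health, and whether it is trusted to carry sensitive data. Unhealthy paths are skipped, which gives failover, and sensitive traffic is restricted to trusted paths. The `Path` fields, path names, and load penalty are hypothetical. A real system would feed these values from live telemetry.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Path:
    name: str
    latency_ms: float      # recently measured round-trip time
    load: float            # utilisation from 0.0 (idle) to 1.0 (saturated)
    healthy: bool = True   # set to False by health checks to trigger failover
    trusted: bool = True   # whether the path may carry sensitive data


def score(path: Path, load_penalty_ms: float = 50.0) -> float:
    """Lower is better: latency plus a penalty that grows with load.

    The 50 ms penalty at full load is an illustrative assumption, not a tuned value.
    """
    return path.latency_ms + load_penalty_ms * path.load


def select_path(paths: List[Path], sensitive: bool = False) -> Optional[Path]:
    """Pick the best healthy path; sensitive traffic is restricted to trusted paths."""
    candidates = [p for p in paths if p.healthy and (p.trusted or not sensitive)]
    return min(candidates, key=score, default=None)


paths = [
    Path("fiber-a", latency_ms=12, load=0.9),
    Path("fiber-b", latency_ms=18, load=0.2),
    Path("public-c", latency_ms=8, load=0.1, trusted=False),
]
print(select_path(paths).name)                  # public-c (score 13)
print(select_path(paths, sensitive=True).name)  # fiber-b  (score 28)
paths[1].healthy = False                        # health check fails on fiber-b
print(select_path(paths, sensitive=True).name)  # fiber-a  (failover)
```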

The Importance of Data Compression and Storage Efficiency

With content throughput reaching new highs globally, compression-related optimizations have become a central R&D theme again in 2026.

Modern compression models include:

- Contextual model-based binary suppression

- Encrypted-compression hybrid layers

- Device-tailored decompression indexes

| Compression Technique | Avg. Compression Ratio | Ideal Use Case |
| --- | --- | --- |
| LZMA2 | 30–45% | Archival platforms |
| Brotli | 25–40% | Web APIs & microservices |
| Adaptive Huffman | 40–55% | Edge devices with storage limits |

Systems aligned with the eporer mindset often default to high-efficiency compression without admin-side performance trade-offs, allowing savings in both compute and storage environments.
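Savings like those in the table depend heavily on the input, so measure them on your own payloads. The sketch below uses only Python's standard library to compare DEFLATE (`zlib`) with LZMA2 (`lzma`, which writes the .xz format). It reports the space saved as a percentage. This sketch assumes the table's "compression ratio" means the percentage of bytes saved. Brotli and adaptive Huffman are not in the standard library, so they are left out.

```python
import lzma
import zlib
from typing import Callable, Dict, Tuple

# Codec name -> (compress, decompress). Brotli is not in the standard library,
# so this sketch compares DEFLATE and LZMA2 (the filter used by the .xz format).
CODECS: Dict[str, Tuple[Callable[[bytes], bytes], Callable[[bytes], bytes]]] = {
    "zlib (DEFLATE, level 9)": (lambda d: zlib.compress(d, 9), zlib.decompress),
    "lzma (xz/LZMA2, preset 6)": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
}


def space_savings(data: bytes) -> Dict[str, float]:
    """Return the percentage of bytes saved by each codec on `data`."""
    results = {}
    for name, (compress, decompress) in CODECS.items():
        blob = compress(data)
        # Verify the round trip so a ratio is never reported for a broken stream.
        if decompress(blob) != data:
            raise RuntimeError(f"{name} round trip failed")
        results[name] = round(100.0 * (1 - len(blob) / len(data)), 1)
    return results


# Repetitive telemetry-style payload; real-world savings depend heavily on the data.
sample = b'{"sensor": "t1", "value": 21.5}\n' * 1000
print(space_savings(sample))
```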

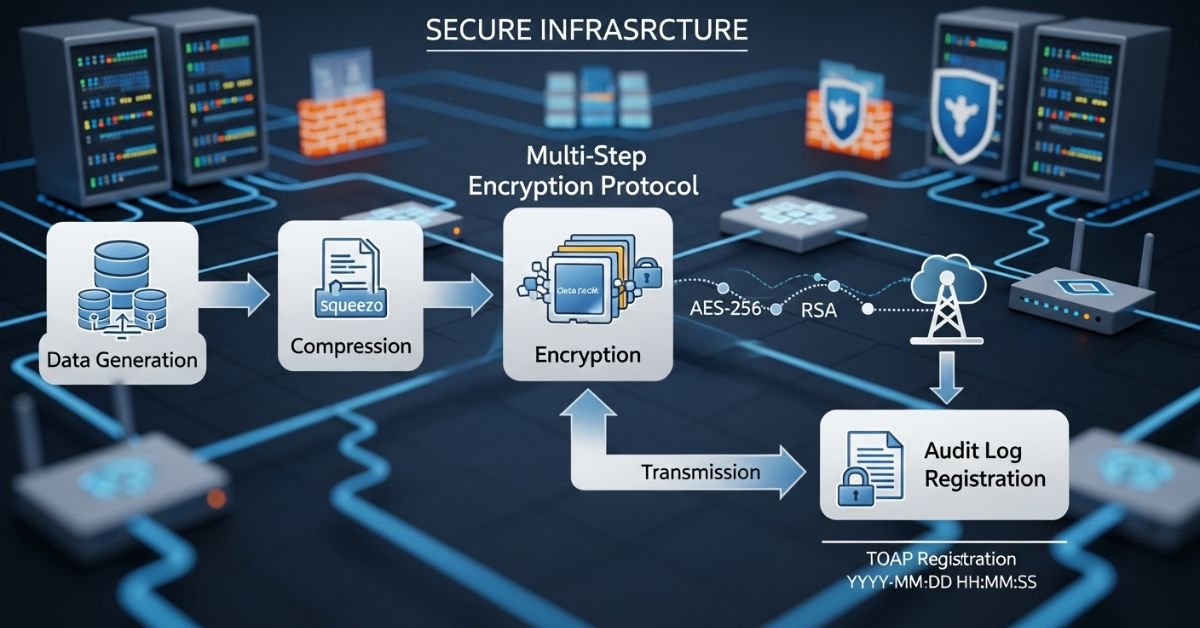

Cybersecurity Integration From the Ground Up

One of the strongest mandates in infrastructure today is security by design. Infosec is shifting from perimeter-based defenses to embedded protections in APIs, user sessions, and even compression streams.

Current best practices:

- Public key rotation with session-based pairing

- Signature validation per data block

- Containerization of microservices

- On-device credentials hashed with biometric pinning

A modern platform like eporer treats encryption and behavioral anomaly tagging not as add-on technologies but as structural features.
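Per-block signature validation combined with key rotation might look like the following minimal sketch. It substitutes HMAC-SHA256 from Python's standard library, which is a symmetric message authentication code rather than a public-key signature. The block size, key ids, and function names are illustrative assumptions. Each tag also covers the block's offset, so reordered blocks are rejected. The sketch does not detect a stream truncated at a block boundary, however.

```python
import hashlib
import hmac
from typing import Dict, List, Tuple

BLOCK_SIZE = 4096  # illustrative block size in bytes
SignedBlock = Tuple[str, bytes, bytes]  # (key_id, block, tag)


def _tag(key: bytes, offset: int, block: bytes) -> bytes:
    # Binding the offset into the tag stops blocks from being reordered undetected.
    return hmac.new(key, offset.to_bytes(8, "big") + block, hashlib.sha256).digest()


def sign_blocks(data: bytes, key_id: str, keys: Dict[str, bytes]) -> List[SignedBlock]:
    """Split `data` into blocks and attach an HMAC-SHA256 tag to each one."""
    key = keys[key_id]
    return [
        (key_id, data[off:off + BLOCK_SIZE], _tag(key, off, data[off:off + BLOCK_SIZE]))
        for off in range(0, len(data), BLOCK_SIZE)
    ]


def verify_blocks(signed: List[SignedBlock], keys: Dict[str, bytes]) -> bytes:
    """Check every block's tag and return the reassembled data, or raise ValueError."""
    parts, offset = [], 0
    for key_id, block, tag in signed:
        key = keys.get(key_id)
        if key is None:  # key was rotated out and retired
            raise ValueError(f"unknown or retired key id: {key_id}")
        if not hmac.compare_digest(_tag(key, offset, block), tag):
            raise ValueError(f"block at offset {offset} failed validation")
        parts.append(block)
        offset += len(block)
    return b"".join(parts)


# Demo keys only; real keys would come from a secrets manager and rotate on a schedule.
keys = {"2026-01": b"old-demo-secret", "2026-02": b"new-demo-secret"}
payload = b"x" * 10_000
signed = sign_blocks(payload, "2026-02", keys)
assert verify_blocks(signed, keys) == payload

signed[1] = (signed[1][0], b"y" * BLOCK_SIZE, signed[1][2])  # tamper with one block
try:
    verify_blocks(signed, keys)
except ValueError as err:
    print(err)  # block at offset 4096 failed validation
```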

Real-Time Analytics Without Overhead

Fast environments generate fast data, but many platforms fall behind when aggregating millions of data points per second. The solution lies in lean, integrated analytics engines situated close to the data source.

Features of real-time analytics platforms:

- ML-ready stream parsing

- Multi-tenant memory isolation

- Fraud or behavior alerts in under one second

- Built-in data lifecycle management (auto discard, log aging)

Eporer-aligned systems filter, sort, and flag high-throughput data on edge nodes. This keeps back-end APIs from being flooded and gives developers real-time instrumentation.
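The next example is a minimal sketch of edge-side filtering. It assumes a simple rolling z-score is an acceptable anomaly rule: only points more than `threshold` standard deviations from the recent window are yielded for forwarding. The window size and threshold are illustrative. Recomputing the mean for every point costs O(window), so a production filter would more likely keep running statistics, for example with Welford's algorithm.

```python
import math
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def flag_outliers(
    values: Iterable[float], window: int = 50, threshold: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) only for points far outside the recent window.

    Routine points are consumed on the edge node and never forwarded. The
    bounded deque discards old history automatically, a simple form of data aging.
    """
    recent: Deque[float] = deque(maxlen=window)
    for i, v in enumerate(values):
        if len(recent) >= 2:
            mean = sum(recent) / len(recent)
            std = math.sqrt(sum((x - mean) ** 2 for x in recent) / (len(recent) - 1))
            if std > 0 and abs(v - mean) / std > threshold:
                yield i, v  # only this event would be sent to the back end
        recent.append(v)


readings = [10.0, 10.2, 9.9, 10.1] * 25 + [55.0] + [10.0] * 10
print(list(flag_outliers(readings)))  # [(100, 55.0)]
```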

AI-Ready Operations and Smart Observability Metrics

AI isn’t just a feature; it’s a system enabler. From predictive maintenance to anomaly detection, AI-powered observability inspects logs, traffic, and metrics, then triggers countermeasures on its own.

| Monitoring Tool | Form of Intelligence | Reaction Speed |
| --- | --- | --- |
| Google Cloud AIOps | Rule-trained prediction | Milliseconds |
| NewRelic One ML+ | Anomaly correlation graph | Real-time alerts |
| Azure Insights AI | CPU/load regression analysis | Dynamic thresholds |

Error-based design logic adds self-healing layers that respond to observed behavior, not just to triggered log entries. As a result, tech teams intervene less, scale more, and resolve incidents faster.
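To make "behavior rather than single log entries" concrete, here is a sketch of a dynamic threshold. An exponentially weighted moving average (EWMA) tracks a metric's normal level. A hypothetical `heal` callback stands in for an action such as restarting a worker, and it fires only after several consecutive breaches. The smoothing factor, multiplier, and patience values are assumptions, not recommendations.

```python
from typing import Callable, Optional


class DynamicThreshold:
    """Track a metric with an EWMA baseline and call `heal` on sustained breaches."""

    def __init__(
        self,
        heal: Callable[[float], None],
        alpha: float = 0.2,    # EWMA smoothing factor (assumed)
        factor: float = 2.0,   # breach = sample above factor x baseline (assumed)
        patience: int = 3,     # consecutive breaches required before acting (assumed)
    ) -> None:
        self.heal = heal
        self.alpha = alpha
        self.factor = factor
        self.patience = patience
        self.baseline: Optional[float] = None
        self._breaches = 0

    def observe(self, value: float) -> bool:
        """Feed one sample; return True if the heal action fired."""
        if self.baseline is None:
            self.baseline = value  # first sample seeds the baseline
            return False
        if value > self.baseline * self.factor:
            self._breaches += 1
            if self._breaches >= self.patience:
                self.heal(value)
                self._breaches = 0
                return True
            return False  # anomalous samples are not folded into the baseline
        self._breaches = 0
        self.baseline = self.alpha * value + (1 - self.alpha) * self.baseline
        return False


actions = []
monitor = DynamicThreshold(heal=actions.append)  # a real system might pass restart_worker
for latency_ms in [100, 105, 98, 102, 300, 310, 320, 101]:
    monitor.observe(latency_ms)
print(actions)  # [320]
```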

Fast API Gateways in Distributed Architectures

API gateways have become either bottlenecks or accelerators. In app environments with layered APIs (authentication, billing, logging), latency adds up quickly.

Success now depends on:

- Multi-region caching

- Trusted client token systems

- Payload compression routing

- Rate-limit shaping based on endpoint behavior

Solutions built in the spirit of eporer often preprocess handshake packets at the edge, reportedly cutting time to first byte by 30–40%.

This directly improves mobile, gaming, fintech, and streaming stacks.
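Per-endpoint rate-limit shaping is often built on token buckets. The sketch below keeps one bucket per client and endpoint, with limits chosen per endpoint. The endpoint paths and numbers are hypothetical. The limits are fixed here rather than adapted from observed endpoint behavior, which would be a further step. An injectable clock makes the behavior easy to test.

```python
import time
from typing import Callable, Dict, Tuple

Clock = Callable[[], float]


class TokenBucket:
    def __init__(self, rate: float, capacity: float, clock: Clock = time.monotonic) -> None:
        self.rate = rate          # tokens refilled per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity
        self.clock = clock
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class EndpointLimiter:
    """One bucket per (client, endpoint); (rate, capacity) limits differ per endpoint."""

    def __init__(self, limits: Dict[str, Tuple[float, float]], clock: Clock = time.monotonic) -> None:
        self.limits = limits  # must include a "default" entry
        self.clock = clock
        self.buckets: Dict[Tuple[str, str], TokenBucket] = {}

    def allow(self, client: str, endpoint: str) -> bool:
        key = (client, endpoint)
        if key not in self.buckets:
            rate, capacity = self.limits.get(endpoint, self.limits["default"])
            self.buckets[key] = TokenBucket(rate, capacity, self.clock)
        return self.buckets[key].allow()


now = [0.0]  # fake clock for the demo
limiter = EndpointLimiter(
    {"/login": (0.5, 2), "/search": (20, 40), "default": (5, 10)},
    clock=lambda: now[0],
)
print([limiter.allow("alice", "/login") for _ in range(3)])  # [True, True, False]
now[0] = 2.0  # two seconds later, one /login token has refilled
print(limiter.allow("alice", "/login"))  # True
```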

Multicloud and Interconnect Strategy

Few organizations rely on a single provider anymore. Multicloud operations allow failover, regional scaling, and workload customization.

Why multicloud is essential:

- Redundancy when a primary provider fails

- Pricing flexibility for different compute needs

- Regulatory advantage for data compliance

- Optimization of predictions from diverse AI models

However, it brings challenges in synchronization, policy configuration, and live monitoring, which infrastructures like eporer aim to address with simplified abstraction templates.
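The redundancy, pricing, and compliance points above can be combined into one placement rule. This minimal sketch assumes a simple per-hour price and a region allow-list for data residency. It picks the cheapest healthy provider in an allowed region. Provider and region names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Provider:
    name: str
    region: str
    cost_per_hour: float
    healthy: bool


def choose_provider(providers: Iterable[Provider], allowed_regions: Set[str]) -> Optional[Provider]:
    """Cheapest healthy provider whose region satisfies the residency rules, or None."""
    eligible = [p for p in providers if p.healthy and p.region in allowed_regions]
    return min(eligible, key=lambda p: p.cost_per_hour, default=None)


fleet = [
    Provider("cloud-a", "eu-west", 0.42, healthy=True),
    Provider("cloud-b", "eu-central", 0.38, healthy=False),  # current outage
    Provider("cloud-c", "us-east", 0.30, healthy=True),
    Provider("cloud-d", "eu-central", 0.45, healthy=True),
]
EU_ONLY = {"eu-west", "eu-central"}
print(choose_provider(fleet, EU_ONLY).name)                 # cloud-a
print(choose_provider(fleet, {"us-east", *EU_ONLY}).name)   # cloud-c
```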

Open Standards vs. Proprietary Lock-In

Developers today demand open integrations that evolve with community feedback, not siloed systems.

| Platform Type | Pros | Cons |
| --- | --- | --- |
| Open-source-first | Transparent, customizable, trusted | Requires management skills |
| Proprietary platforms | Feature-rich out of the box | Lock-in risk, limited editor access |

Whether it’s protocol flexibility (e.g., MQTT vs. HTTP/2) or secure federated identity systems, eporer is gaining traction in circles that prioritize ecosystem agility over vendor polish.
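One way to keep protocol flexibility is to write application code against a small interface instead of a vendor SDK. This sketch uses Python's `typing.Protocol`. `HttpTransport` posts with the standard library's `urllib.request` to an assumed `{base_url}/{topic}` URL layout. An MQTT transport (not shown) would wrap a client library behind the same `publish` method. `InMemoryTransport` is a hypothetical test double.

```python
import json
import urllib.request
from typing import List, Protocol, Tuple


class Transport(Protocol):
    def publish(self, topic: str, payload: bytes) -> None: ...


class HttpTransport:
    """POSTs each message to {base_url}/{topic}; the URL layout is an assumption."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url.rstrip("/")

    def publish(self, topic: str, payload: bytes) -> None:
        req = urllib.request.Request(
            f"{self.base_url}/{topic}",
            data=payload,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=5):
            pass  # urlopen raises on HTTP error statuses or network failure


class InMemoryTransport:
    """Test double that records messages instead of sending them."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, bytes]] = []

    def publish(self, topic: str, payload: bytes) -> None:
        self.sent.append((topic, payload))


def report_reading(transport: Transport, sensor: str, value: float) -> None:
    """Application code depends only on the Transport interface, not on a vendor SDK."""
    payload = json.dumps({"sensor": sensor, "value": value}).encode()
    transport.publish(f"sensors/{sensor}", payload)


fake = InMemoryTransport()
report_reading(fake, "t1", 21.5)
print(fake.sent)  # [('sensors/t1', b'{"sensor": "t1", "value": 21.5}')]
```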

Futureproofing Architecture: Harmonizing Speed, Trust, and Modularity

As the tech world grows more fragmented and evolves faster, solutions must maximize reusability, observability, and flexibility. Anything built without modular containers and transversal APIs is unlikely to outlast the current cycle.

Next steps in infrastructure include:

- Adopt a zero-trust mindset

- Enable daily observability snapshots

- Version your APIs like code branches

- Prefer infrastructure-as-code backed by tests
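Versioning APIs like code branches can be as simple as registering each version's handlers side by side. With that approach, v1 clients keep working while v2 evolves. The sketch below is a hypothetical, framework-free router. The `VersionedAPI` class, the `orders` route, and the payload fields are illustrative assumptions.

```python
from typing import Callable, Dict, Tuple

Handler = Callable[[dict], dict]


class VersionedAPI:
    """Routes "/<version>/<path>" requests to handlers registered per version."""

    def __init__(self) -> None:
        self.routes: Dict[Tuple[str, str], Handler] = {}

    def route(self, version: str, path: str) -> Callable[[Handler], Handler]:
        def register(fn: Handler) -> Handler:
            self.routes[(version, path)] = fn
            return fn
        return register

    def dispatch(self, url_path: str, body: dict) -> Tuple[int, dict]:
        parts = url_path.strip("/").split("/", 1)  # "/v2/orders" -> ["v2", "orders"]
        if len(parts) != 2:
            return 404, {"error": "missing version prefix"}
        handler = self.routes.get((parts[0], parts[1]))
        if handler is None:
            return 404, {"error": f"no route for {url_path}"}
        return 200, handler(body)


api = VersionedAPI()


@api.route("v1", "orders")
def create_order_v1(body: dict) -> dict:
    return {"id": 1, "total": body["total"]}


@api.route("v2", "orders")
def create_order_v2(body: dict) -> dict:
    # v2 adds a currency field without breaking existing v1 clients.
    return {"id": 1, "total": body["total"], "currency": body.get("currency", "USD")}


print(api.dispatch("/v1/orders", {"total": 10}))  # (200, {'id': 1, 'total': 10})
print(api.dispatch("/v2/orders", {"total": 10}))  # (200, {'id': 1, 'total': 10, 'currency': 'USD'})
```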

The best-performing platforms are not the most expensive ones; they are the most intelligently deployed. This is where quiet but sophisticated frameworks such as “eporer” are making a long-term difference.

FAQs

What is eporer in technology?

Eporer refers to an emerging tech model or platform centered on optimizing data flow, compression, and edge infrastructure.

Is eporer a tool, platform, or protocol?

It can refer to a system or a framework approach. The term is currently ambiguous but tied to decentralized infrastructure themes.

Who uses eporer-type systems?

Enterprise teams in edge IoT, fintech, AI-backed logs, or high-throughput services use these systems.

Can eporer improve real-time analytics latency?

It can, especially when integrated with lightweight observability layers and efficient packet routing.

Is eporer open-source or commercial?

Details remain unclear; it may relate to private research or upcoming hybrid specifications.

Conclusion

In 2026, the tools behind our networks aren’t just enablers; they’re differentiators. Whether powering logistics, telehealth, industrial IoT, or machine learning pipelines, everything now hinges on delivery, latency, and adaptive flow.

Frameworks like eporer (or those inspired by its design ethos) tackle this head-on. With efficient compression, intelligent routing, real-time parsing, and zero-trust deployment, such systems embody the future of high-throughput, cloud-hardened tech.